Introduction

Policymakers, experts and advocates have promoted many different types of education reform over the past few decades, but what is the evidence about the efficacy of these programs? EdChoice partnered with Hanover Research to find out what research has been conducted in nine major education reform areas of interest:

- class size (small classes)

- common enrollment applications/unified enrollment systems

- open enrollment (inter-/intra-district)

- portfolio management

- pre-kindergarten

- private school choice

- public charter schools

- school size (small schools)

- school takeover

We want to be clear: We reviewed experimental research on these nine education reform areas not to say that one reform is “better” than another, but to report how much more or less we know about some reforms’ effects than about others’, based on the volume of existing experiments that we reviewed. Our goal is not to compare these reforms or to promote one improvement approach over another. We wanted to find out what has been rigorously studied and where there are needs and opportunities for high-quality empirical research.

The best methodology available to researchers for generating “apples-to-apples” comparisons is the randomized controlled trial (RCT), which researchers also refer to as a random assignment study or experimental study. These studies conduct experiments that compare treatment and control groups, and they are widely considered to be the “gold standard” of research methods. We prefer evaluating school choice programs and other education reforms based on experiments.

For this summary, we review RCTs only, not studies using other methods, because experiments comparing randomly assigned treatment and control groups allow researchers to identify reform, policy or program effects while minimizing bias from unobservable factors. Researchers conducting RCTs can report unbiased estimates of effects based on two different comparisons:

- Intent-to-treat (ITT) effects, which compare outcomes between students who won the lottery and students who did not, regardless of whether they actually participated. ITT is the estimated effect of being chosen for treatment via randomization.

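- Treatment-on-the-treated (TOT) effects, which estimate the effect of actually attending a private school (or otherwise participating in a given reform, policy or program) rather than merely being offered the chance to. Because some lottery winners never participate and some non-winners participate anyway, researchers typically recover TOT by using the random lottery outcome as an instrument, scaling the ITT estimate by the difference in participation rates between winners and non-winners. TOT is the estimated effect of enrolling or participating, hence receiving the treatment.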
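To make the ITT/TOT distinction concrete, here is a minimal Python sketch on simulated, hypothetical data that is not drawn from any study in this brief. It assumes a lottery in which 80 percent of winners and 10 percent of non-winners end up enrolling, and a true enrollment effect of 0.20 standard deviations; those numbers and the function names are illustrative assumptions. It computes ITT as the winner/non-winner difference in mean scores and TOT with the Wald (instrumental-variables) estimator, which is one standard approach rather than the method of any particular study reviewed here.

```python
import random
from dataclasses import dataclass


@dataclass
class Applicant:
    won_lottery: bool  # random offer of a seat
    enrolled: bool     # whether the student actually participated
    score: float       # outcome, in standard deviation units


def simulate_lottery(n=20_000, true_effect=0.20, seed=0):
    """Simulate hypothetical applicants with imperfect take-up (illustrative numbers only)."""
    rng = random.Random(seed)
    applicants = []
    for _ in range(n):
        won = rng.random() < 0.5
        # Assumed take-up: 80% of winners enroll; 10% of non-winners get in some other way.
        enrolled = rng.random() < (0.80 if won else 0.10)
        # Only actual enrollment changes the score (the exclusion restriction).
        score = rng.gauss(0.0, 1.0) + (true_effect if enrolled else 0.0)
        applicants.append(Applicant(won, enrolled, score))
    return applicants


def average(values):
    values = list(values)
    return sum(values) / len(values)


def itt_and_tot(applicants):
    winners = [a for a in applicants if a.won_lottery]
    others = [a for a in applicants if not a.won_lottery]
    # ITT: compare by lottery result, ignoring who actually enrolled.
    itt = average(a.score for a in winners) - average(a.score for a in others)
    # First stage: how much winning the lottery raised the enrollment rate.
    take_up_gap = average(a.enrolled for a in winners) - average(a.enrolled for a in others)
    # TOT via the Wald estimator: scale ITT up by the take-up gap.
    tot = itt / take_up_gap
    return itt, take_up_gap, tot


if __name__ == "__main__":
    itt, gap, tot = itt_and_tot(simulate_lottery())
    print(f"ITT = {itt:.3f}, take-up gap = {gap:.3f}, TOT = {tot:.3f}")
```

With a simulated take-up gap of about 0.7, the ITT estimate lands near 70 percent of the TOT estimate. The TOT rescaling relies on assumptions, notably that winning the lottery affects outcomes only through enrollment.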

When random assignment is not possible, some researchers use statistical techniques to approximate randomization. These studies are sometimes referred to as quasi-experimental or non-experimental studies. All research methods, including RCTs, have tradeoffs. While RCTs generally have very high internal validity because they control for unobservable factors (e.g., student and parent motivation), their external validity is not necessarily high (or low).

We set out to make it easier for policymakers and other K–12 stakeholders to determine not just what research has been conducted, but where, when and how many times a given reform has been studied via experiments. The studies reviewed for this brief have very strong “internal validity,” meaning we can be confident that the observed effects are attributable to the program itself rather than to other factors, at least for the students, time and place studied. However, we advise caution about the “external validity” of the reviewed research and, more generally, of all empirical research on education reforms. That is to say: Study results may or may not generalize to other students served by other programs or reforms.

Our overall punchline is not new, but we believe it is still very important: We need more high-quality research in all of these reform areas. As policymakers and advocates continue to innovate and implement new programs aimed at fostering K–12 student success, we must continue to set goals and study the outcomes so that we can determine whether we are, in fact, succeeding.

EdChoice is a research-based advocacy organization. We take empirical research seriously while also acknowledging that it has plenty of limitations. We believe that we must continue to expand educational opportunity so that all families have access to schooling options, regardless of type or sector, that work for their children. In this brief, we review and summarize the experimental research to date. In our research capacity, we look forward to continuing to gather information and share it with policymakers, partners and other researchers.

Key Findings

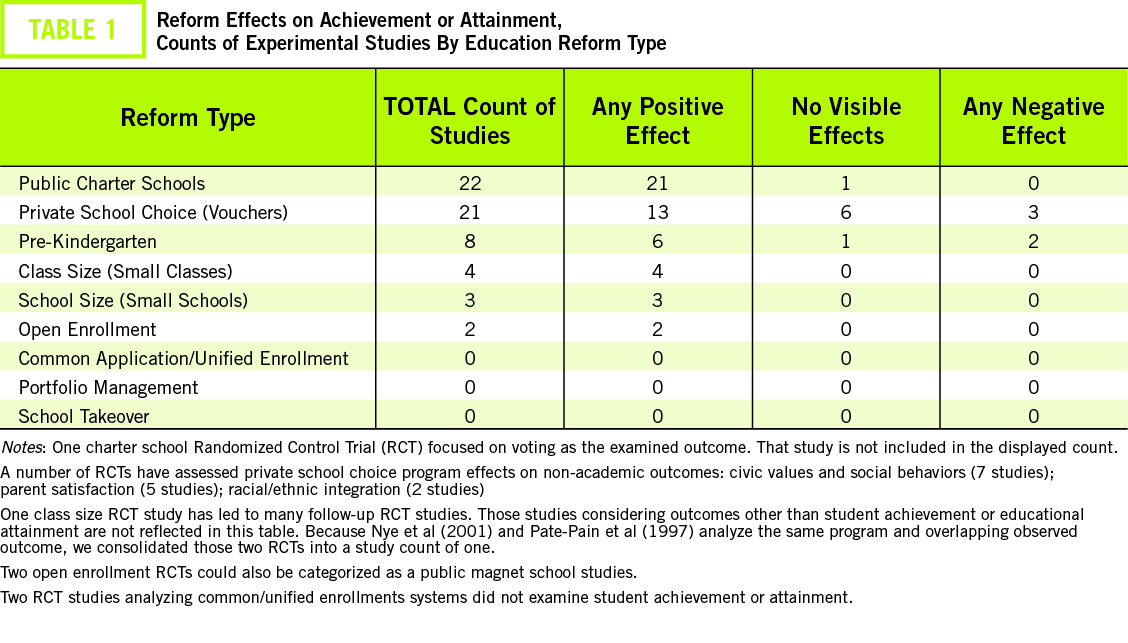

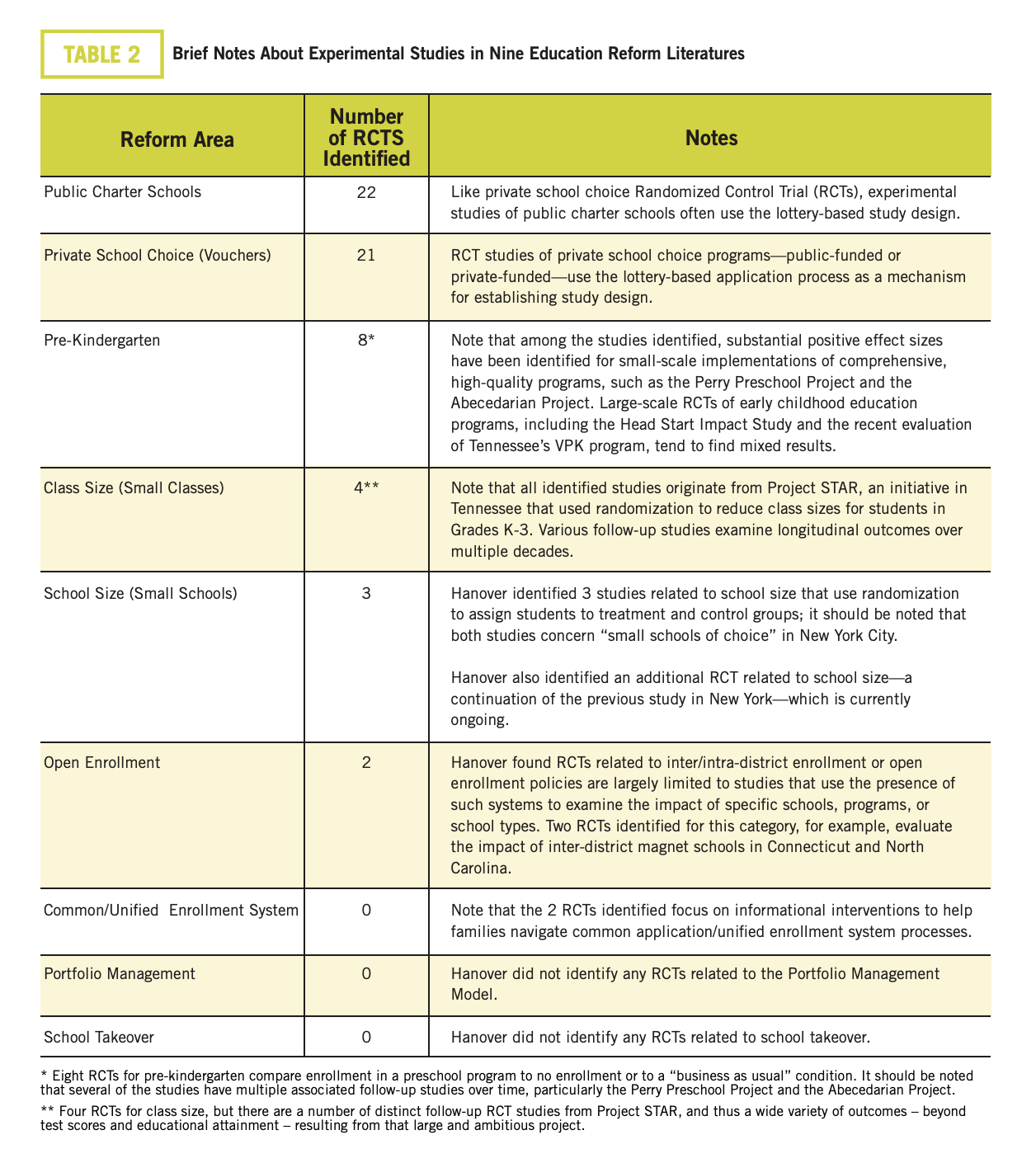

Public Charter Schools—Hanover Research identified 22 RCT studies of public charter schools that considered impacts on student achievement or attainment. Regulations affecting public charter schools tend to require over-subscribed charters to conduct a random lottery for enrollment offers among all applicants. Therefore, charter schools provide an interesting opportunity for experimental research that leverages this random assignment to compare outcomes for students who win the lottery with outcomes for students who do not. In general, the large-scale studies that examine the charter sector as a whole find that the average charter school does not have a significantly different impact on student achievement than the average traditional public school. However, over the years, a number of highly successful charter schools and networks have emerged that produce large, consistent gains in student achievement in math, and sometimes in reading. A growing body of lottery-based studies also finds that charter school enrollment can have a positive impact on longer-term outcomes, such as college enrollment and the quality of the postsecondary institution attended. These benefits are particularly salient among disadvantaged students and in urban centers, including New York, Boston, and Chicago.

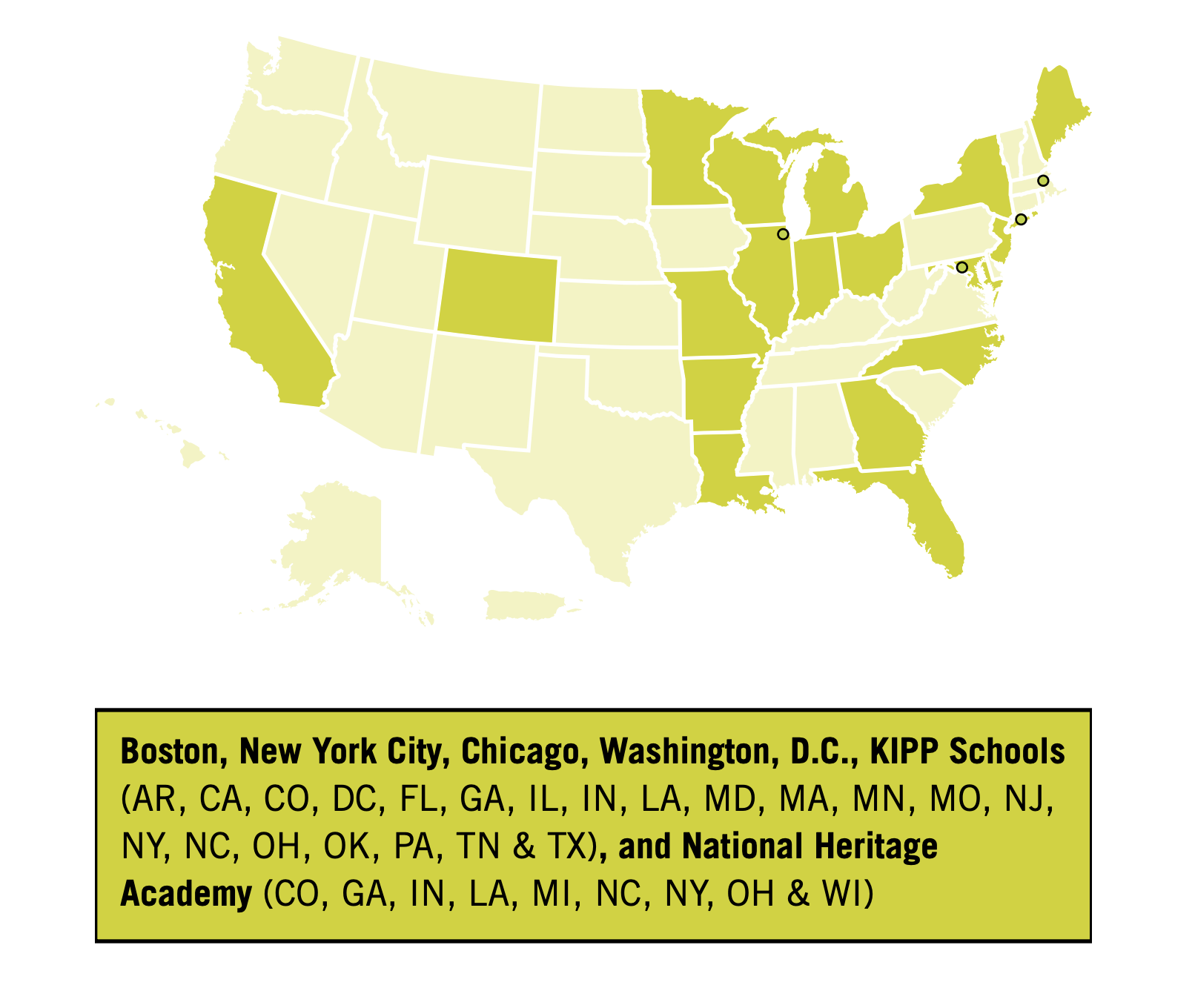

Private School Choice (Vouchers)—EdChoice has identified 21 experimental studies reporting the effects of private school choice (voucher) programs on participating students’ test scores and educational attainment. Seventeen of these RCT studies analyzed participant test scores: 11 have found positive outcomes for either the full sample or at least one subsample of students studied, four found no visible effect for any group of students, and three found negative outcomes for all or some groups of students. This body of research has studied three publicly funded voucher programs and five privately funded scholarship programs across five states and the District of Columbia. In recent years, a growing body of experimental research has also considered attainment outcomes such as high school graduation, college entrance, or college completion. Four RCT studies examined private school choice program participants’ educational attainment: two have found positive outcomes for either the full sample or at least one subsample of students studied, and two found no visible effect for any group of students. None of these studies has found negative educational attainment outcomes for all or some students. These studies focused on two publicly funded voucher programs and one privately funded scholarship program across two states and the District of Columbia.

Pre-Kindergarten—Hanover’s review finds eight RCTs related to Pre-Kindergarten or Head Start. Among these eight studies, three examine the impact of high-quality, very small-scale programs, generally including fewer than 200 students total. The remaining five studies examine larger-scale early childhood education (ECE) programs. Across the body of research, small-scale studies generally find stronger positive results of ECE program participation than larger-scale studies do. Although a recent evaluation of a statewide program in North Carolina finds a positive effect of participation on students’ early literacy skills, evaluations of both Head Start (national sample) and Tennessee VPK that follow students through Grade 3 find that most, if not all, significant benefits of early childhood education participation fade by Grade 3. However, a follow-up study of Tennessee VPK finds that the effects of the program vary by neighborhood, with a significant positive effect on Grade 3 reading achievement in high-poverty neighborhoods and a significant negative effect in low-poverty neighborhoods. Findings from large-scale RCTs suggest that high-quality programming is difficult to scale while maintaining benefits for students. Alternatively, the measured impacts of large-scale Pre-K programs may be diluted if many children in the control group attend high-quality ECE programs selected by their parents, which they are free to do in our cited studies.

Class Size—Hanover identified four RCT studies related to class size, all of which draw on a single experiment, the Tennessee STAR Project, whose students have been followed in experimental studies over multiple decades. The experiment found positive effects on standardized test performance for students who were assigned to a small class (defined as 13 to 17 students) in Grades K-3 compared to students assigned to regular class sizes (defined as 22 to 25 students) in the same grades, either with or without a teacher aide assigned to the classroom. The studies reviewed for this report find that the benefit of small classes in the early grades persists through at least Grade 8, even after all students returned to regular class sizes in Grade 4, with larger benefits for minority students than for white students, and for students who attended a small class in all four years (Grades K-3) than for those who did not. At least four studies have continued to follow the Project STAR students into high school and adulthood, finding very small but significant differences in educational outcomes favoring small-class students, including the likelihood of taking a college entrance exam and of enrolling in college by age 20.

School Size—Hanover identified three RCT studies related to school size. It should be noted that these are relatively small-scale experiments that examine the impact of “small schools of choice,” meaning that the size of the school is likely not the only factor that makes treatment schools different from control schools in these studies. Further, all three studies take place in New York City and thus may not be generalizable to a broader setting. Despite these limitations, the three studies find that students who attend a small school of choice are more likely to graduate high school on time (within four years) and tend to accumulate more credits at each grade level than their counterparts in other high schools.

Open Enrollment (Inter-/Intra-District)—A review of the research surrounding inter-/intra-district enrollment did not identify any RCT studies that compared districts implementing such policies with districts that do not. Accordingly, Hanover expanded its search criteria to include studies that use inter- and intra-district enrollment policies to assess the impact of specific schools or programs. Hanover identified two RCTs related to inter-/intra-district open enrollment policies specific to public magnet programs. The first, a 2009 study of Connecticut’s inter-district magnet schools by Bifulco, Cobb, and Bell, found significant positive impacts on Grade 8 standardized exams in math (0.14 standard deviations) and literacy (0.28 standard deviations) for students who enrolled in the inter-district program in Grade 6 or later. The second, a recent RCT of North Carolina’s early college programs in public magnet schools, found that these programs significantly improved college enrollment and completion, with effects concentrated on enrollment in two-year colleges.

Common Enrollment/Unified Enrollment Systems—A review of the research surrounding common enrollment applications and unified enrollment systems did not identify any RCTs that examine the effects of such systems on student achievement or attainment, or that compare such a system to a “business as usual” school choice approach. However, our review identified two recent RCTs that used informational and cue-to-action interventions to support students and parents navigating the school choice process. A 2018 study of Grade 8 students in New York found that a simple informational intervention could improve the quality of the high schools to which students applied and were “matched,” while a 2019 study targeting parents of young children in New Orleans found that a text message-based informational intervention increased the likelihood that parents would apply for, and ultimately enroll their child in, free, public early childhood education programs offered in the city.

Hanover Research did not identify any relevant RCT studies for Portfolio Management or School Takeover. For both reform areas, the lack of experiments is likely due to a combination of the logistical and legal/ethical challenges of randomizing these types of educational reforms. Further, Portfolio Management is a relatively new and somewhat poorly defined reform, and as such is an emerging area of interest for researchers.

Overview

EdChoice has partnered with Hanover Research to explore the research surrounding eight of the organization’s major education reform areas of interest: class size (small classes); pre-kindergarten; public charter schools; common enrollment applications/unified enrollment systems; open enrollment (inter-/intra-district); school size (small schools); portfolio management; and school takeover. The objective of this review is to identify the impact of each of these school reforms on student outcomes. Accordingly, this review of the empirical research is restricted to experimental studies, that is, randomized controlled trials (RCTs), considered the “gold standard” methodology for demonstrating causation and intervention impact.

EdChoice also provides a summary of the experimental research on private school choice programs (vouchers). Each year our organization publishes an up-to-date review of seven outcomes of private school choice programs in a publication titled The 123s of School Choice.

For this brief, we share our assessment of only those RCT studies that examine one of two types of outcomes: participating students’ achievement or their educational attainment. Hanover conducted searches for relevant RCTs in May 2019. Each section provides a high-level summary of findings from the body of research. More in-depth summaries of the specific studies’ findings can be accessed in a supplement to this brief posted online at [URL HERE]. In that supplemental technical report, when applicable, Hanover includes findings from the U.S. Department of Education’s What Works Clearinghouse (WWC), which reviews individual education research studies for quality, impact, and implications.

Public Charter Schools

Findings

Hanover Research identified 22 RCT studies of public charter schools that report effects on students’ achievement or educational attainment. Several small-scale studies of high-profile charter schools or charter networks, such as the KIPP Network or Promise Academy in the Harlem Children’s Zone, have demonstrated consistent, significant, and strong effects of charter enrollment on student test scores in reading and math. However, several large national and state studies of charter school enrollment as a whole find more lackluster results; in a national sample of 36 charter middle schools, Gleason et al (2010) found no significant differences in student achievement between lottery winners and lottery losers. A 2016 analysis by Cohodes et al of lottery-based charter school studies spanning more than 100 schools found significant but very small benefits for students who won charter school lotteries, generally less than a third of the impact on test scores observed for some of the highest-performing individual charter schools. A recent analysis of postsecondary outcomes for students enrolled in KIPP middle schools finds a positive effect on college enrollment but statistically insignificant effects on persistence through the first four semesters of college.

In recent years, lottery-based studies of charter schools have increasingly focused not only on whether charter schools improve student achievement, but also on which charter schools improve it and on the common elements of successful charters. Multiple studies find evidence for the benefits of the “No Excuses” charter school model in urban settings like Boston and Chicago (see, for example, Abdulkadiroğlu, et al, 2011, Davis and Heller, 2017, and Angrist, Pathak, and Walters, 2013). However, some research suggests that test score improvements associated with “No Excuses” charter schools can be explained by other factors related to school setting (Chabrier, Cohodes, & Oreopoulos, 2016). Relatedly, Dobbie and Fryer’s 2013 study of charter schools in New York City identifies several key elements associated with the highest-performing charters in the city, including setting high expectations, using data-based instruction, providing frequent teacher feedback, offering high-dosage tutoring, and increasing instructional time. Emerging research from Boston finds that, when given the opportunity to expand, the city’s highest-performing charter schools have done so while maintaining quality and achieving comparable gains in student achievement at their new campuses and locations (Cohodes, Setren, & Walters, 2019).

Over the years, a number of highly successful charter schools and networks have emerged that produce large, consistent gains in student achievement in math, and sometimes in reading. A small but growing body of lottery-based studies also finds that charter school enrollment can have a positive impact on longer-term outcomes, such as college enrollment and the quality of the postsecondary institution attended. These benefits are particularly salient in urban centers, including New York, Boston, and Chicago, and among disadvantaged students. In recent years, researchers have begun to focus on identifying the key elements of effective charter schools and exploring means for replication…

Context

Over the last several decades, multiple methodological approaches have been used to try to capture the impact of public charter schools on student outcomes. In general, the randomized controlled trial remains the “gold standard” methodology and is a good fit for evaluating charter schools in some cases, such as when demand for enrollment at an individual charter school exceeds the number of seats available and the school uses a random lottery to award seats to the applicant pool. However, because this methodology can only be employed when individual schools are over-subscribed, such studies may represent only the most popular and in-demand schools, calling into question whether their results generalize across the charter school sector.

Regulations affecting public charter schools tend to require over-subscribed charters to conduct a random lottery for enrollment offers among all applicants. Therefore, charter schools provide an interesting opportunity for experimental research that leverages this random assignment to compare outcomes for students who win the lottery with outcomes for students who do not. In general, large-scale studies that examine the charter sector as a whole find that the average charter school does not have a significantly different impact on student achievement than the average traditional public school. However, it is important to note that randomization (the lottery) occurs at the school level, so experimental studies of charter schools are typically evaluations of specific charter schools, not of the charter law or policy per se or of all charter schools in that jurisdiction.
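The point that lottery-based evidence can come only from over-subscribed schools can be sketched in a few lines of Python. This is a simplified, hypothetical lottery for a single school; the function name and inputs are illustrative assumptions, and real charter lotteries often add rules such as sibling preferences and waitlists, which this sketch ignores.

```python
import random


def admission_lottery(applicants, seats, seed=None):
    """Hypothetical single-school lottery returning (offered, not_offered).

    Assumes applicant IDs are unique. If the school is not over-subscribed,
    every applicant receives an offer, so no randomly assigned comparison
    group exists to study.
    """
    pool = list(applicants)
    if len(pool) <= seats:
        return pool, []
    rng = random.Random(seed)
    offered = rng.sample(pool, seats)  # each applicant has an equal chance
    offered_set = set(offered)
    not_offered = [a for a in pool if a not in offered_set]
    return offered, not_offered


# An over-subscribed school yields both groups; an under-subscribed one does not.
winners, losers = admission_lottery([f"student_{i}" for i in range(120)], seats=50, seed=1)
print(len(winners), len(losers))  # 50 70

everyone, nobody = admission_lottery(["a", "b", "c"], seats=10)
print(len(everyone), len(nobody))  # 3 0
```

Because a comparison group exists only when applicants outnumber seats, studies built this way estimate effects for the schools families most wanted to attend, which is the generalizability concern about lottery-based charter studies.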

In recent years, there have been several high-quality reviews of the empirical research on public charter schools, and we recommend them for additional understanding and context.1

Private School Choice (Vouchers)

Findings

EdChoice has identified 21 experimental studies reporting the effects of private school choice (voucher) programs on participating students’ test scores and educational attainment. Seventeen RCT studies have examined the effects of these programs on participating students’ test scores. In recent years, a growing body of experimental research, four studies in all, has considered attainment outcomes such as high school graduation, college entrance, or college completion.

- 17 RCT studies analyzed participant test scores: 11 have found positive outcomes for either the full sample or at least one subsample of students studied, four found no visible effect for any group of students, and three found negative outcomes for all or some groups of students. This body of research has studied three publicly funded voucher programs and five privately funded scholarship programs across five states and the District of Columbia.

- 4 RCT studies examined private school choice program participants’ educational attainment: two have found positive outcomes for either the full sample or at least one subsample of students studied, and two found no visible effect for any group of students. None of these studies has found negative educational attainment outcomes for all or some students. These studies focused on two publicly funded voucher programs and one privately funded scholarship program across two states and the District of Columbia.

Context

Do students get better test scores after receiving private school vouchers? For more than a decade EdChoice has tracked and reviewed experimental studies that reveal whether students who won a lottery and/or used scholarships to attend a private school of their choice achieved higher test scores than students who applied for but did not receive or use scholarships.

Seventeen RCTs have studied the effects of these programs on participating students’ test scores. About one-third of these studies analyze a privately funded voucher program in New York City. The Louisiana Scholarship Program (LSP) is the only statewide voucher program that has been studied experimentally. All other RCTs have examined voucher or scholarship programs limited to cities, including Milwaukee, Charlotte, Cleveland, Dayton, New York City, and Toledo.

Longitudinal evaluations of the LSP and the District of Columbia Opportunity Scholarship Program concluded in 2019. The D.C. evaluation did not detect any significant impact of the program on the test scores of participants or any subgroup of students after three years in the program. The LSP evaluation found statistically significant negative effects on participant test scores in math and reading. Reports on the first couple of years of the LSP were the first studies to find negative effects from a private school voucher program. While the gap in test scores between participants and non-participants narrowed during the second and third years of the program, it widened slightly during the fourth year.

EdChoice has also reviewed studies considering whether students who won a lottery or used scholarships to attend a private school of their choice were more likely to graduate from high school, more likely to enroll in college and/or more likely to persist in college than students who did not use scholarships.2

Pre-Kindergarten

Findings

A review of the research surrounding Pre-Kindergarten identified eight RCT studies, although some of these distinct studies are follow-up research on earlier programs. Among these eight studies, three examine the impact of high-quality, very small-scale programs, generally including fewer than 200 students total. The remaining five studies examine larger-scale early childhood education (ECE) programs.

Across the body of research, small-scale studies generally find stronger positive results of ECE program participation than larger-scale studies do. Although a recent evaluation of a statewide program in North Carolina finds a positive effect of participation on students’ early literacy skills, evaluations of both Head Start (national sample) and Tennessee VPK that follow students through Grade 3 find that most, if not all, significant benefits of early childhood education participation fade by Grade 3. However, a follow-up study of Tennessee VPK finds that the effects of the program vary by neighborhood, with a significant positive effect on Grade 3 reading achievement in high-poverty neighborhoods and a significant negative effect in low-poverty neighborhoods.

Findings from large-scale RCTs suggest that high-quality programming is difficult to scale while maintaining benefits for students. Alternatively, as previously noted, the impacts of large-scale studies of Pre-K may be diluted if many children in the control group attend high-quality early childhood programs selected by their parents, which they are free to do in all studies noted in this report.

Context

The research on the long-lasting positive effects of early childhood education programs tends to draw from a few high-quality, small-scale, and rigorously evaluated studies, such as the Abecedarian Project in North Carolina and the High/Scope Perry Preschool Project in Michigan. Some researchers, including Russ Whitehurst at Brookings, argue that the Abecedarian Project was unusually supportive of children and families and cannot simply be equated to the services typically available to 3- and 4-year-old children as “pre-kindergarten” or “childcare” today.3 Furthermore, there are very few randomized controlled trials of pre-kindergarten programs implemented at scale, such as at the state, county, or district level. A 2017 systematic review of pre-kindergarten programs identified just one RCT study of a “scaled-up” pre-kindergarten program.4 An even more recent review published by the Learning Policy Institute, which examined effect sizes across more than a dozen studies of pre-kindergarten programs, identified just two randomized controlled trials, each with one or more follow-up studies: the Head Start Impact Study and the Tennessee VPK Study.5 Dale Farran and Mark Lipsey provide further context on recent interpretations of the body of empirical research on pre-kindergarten.6

For this review, Hanover focused on identifying studies that randomly assigned young children to attend or not attend a selected pre-kindergarten, preschool, or other early childhood education program. It should be noted that several of the studies confirm that although children in the control group were not selected to enroll in the early childhood education program of interest, many did enroll in some form of non-home-based care. Accordingly, WWC’s review of two of the studies listed below compares children’s enrollment in the program of interest, such as Head Start or the Abecedarian Project, with “business as usual,” which typically includes control group children who may or may not be enrolled in another form of pre-kindergarten or childcare. Thus, these are studies of the effectiveness of specific program models rather than pure studies of the impact of pre-kindergarten compared to no early schooling.

Class Size

Findings

The STAR project randomized more than 7,000 students in Grades K-3 and their teachers into three treatment categories: small classes (13-17 students), regular classes with a teacher’s aide (22-25 students), and regular classes without a teacher’s aide (i.e., the status quo of 22-25 students). The initial study, published in the early 1990s, found that, at follow-up, Kindergarten students in small classes achieved significantly higher mean test scores across multiple measures of achievement, including math, sounds/letters, words/sentences, and total reading, than students in regular classes, both with and without a teacher aide. By contrast, some evidence in the study suggests a higher mean score on self-concept and motivation for students in regular-sized classes than for those in small classes.

Follow-up studies conducted since 1990 have examined the original data and various follow-up data points in multiple ways, with many finding evidence for lasting effects of small class sizes in the early grades. In 2001, Nye, Hedges, and Konstantopoulos found that students who were in a small class for at least one year during Grades K-3 outperformed students in regular-sized classes across Grades K-3 on a standardized math exam in Grade 9 by 0.146 standard deviations. Further, students who were in small classes in every year of Grades K-3 outperformed students in regular class sizes on the same Grade 9 math exam by 0.340 standard deviations. Effect sizes were larger for minority students than for white students, suggesting that some subpopulations may benefit more from reduced class sizes than others. Similarly, Krueger and Whitmore’s 2001 study found that while the performance benefits of small classes in Grades K-3 appeared to diminish after Grade 3, a persistent, positive effect could be observed on standardized exam scores through at least Grade 8. Furthermore, students assigned to small classes were slightly more likely to take a college entrance exam (SAT or ACT) in high school.
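Effect sizes such as 0.146 and 0.340 standard deviations express a difference in group means in standard deviation units. The brief does not say exactly which standard deviation each study used as the denominator, so the Python sketch below assumes one common choice, the pooled standard deviation (Cohen’s d), and uses made-up scores. Assuming normally distributed scores, it also shows where the average treated student would fall within the comparison group’s distribution; the function names are illustrative.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def standardized_effect(treatment, control):
    """Difference in means divided by the pooled standard deviation (Cohen's d)."""
    n_t, n_c = len(treatment), len(control)
    pooled_var = ((n_t - 1) * stdev(treatment) ** 2
                  + (n_c - 1) * stdev(control) ** 2) / (n_t + n_c - 2)
    return (mean(treatment) - mean(control)) / sqrt(pooled_var)


def percentile_of_average_treated(effect_size):
    """Comparison-group percentile of the average treated student, assuming normal scores."""
    return 100 * NormalDist().cdf(effect_size)


# Made-up scores: means of 4 and 3, pooled SD of 2, so d = 0.5.
print(standardized_effect([2, 4, 6], [1, 3, 5]))  # 0.5

for d in (0.146, 0.340):
    print(f"{d:.3f} SD -> roughly percentile {percentile_of_average_treated(d):.0f} of the comparison group")
```

Under the same normality assumption, the reported 0.146 and 0.340 figures correspond to moving an average student to roughly the 56th and 63rd percentiles of the comparison group. This is only a translation of the reported numbers, not an additional finding.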

Other studies examined long-term outcomes for the Project STAR students, including high school dropout rates (Pate-Bain et al., 1997) and college attendance, income at age 27, home ownership, and retirement savings (Chetty et al., 2011). These studies find very small but significant differences in these broader adult outcomes in favor of students assigned to small classes in Grades K-3. For instance, Chetty et al find that students in small classes were 1.8 percentage points more likely to be enrolled in college at age 20 than other students in the study.

Context

It should be noted that the Tennessee-based Student/Teacher Achievement Ratio Project (Project STAR), which began in the mid-1980s, represents the most substantial source of evidence for the benefits of reduced class sizes. Project STAR used randomization to assign teachers and students to treatment and control conditions, and ultimately included about 7,000 students in Grades K-3.7 To date, this is the only randomized controlled trial Hanover has identified related to class size in the United States. The figure below presents the original study and a selection of notable follow-up research, which has continued to follow the original students through at least 2011. Note that an ERIC search reveals more than 100 studies related to the Project STAR datasets, although not all related studies will be randomized controlled trials.8 Nearly a decade ago, Chingos and Whitehurst (2011) also reviewed the high-quality empirical research up to that time, including non-RCT studies, which may also be of interest to readers.9

School Size

Findings

As previously noted, all three RCT studies identified for this review consider the same general group of small high schools in New York City. The schools span Grades 9 through 12 and are purposefully small, tending to enroll around 100 students per grade level. All are considered “schools of choice” because students must apply through the City’s centralized high school enrollment system. However, the schools are non-selective, meaning that there are no entry requirements and that seats at any over-subscribed school are assigned at random through a lottery. Accordingly, all three studies leverage this admissions process to compare lottery winners who enroll in a small school with lottery losers who enroll in another school, which, in theory, minimizes differences between the treatment and comparison groups.

As shown in the figure on the following page, all three studies suggest positive results associated with small schools of choice, including improved 4-year graduation rates and increased credit accumulation (Bloom, Thompson, and Unterman (2010); Bloom and Unterman (2013); and Abdulkadiroğlu, Hu, and Pathak (2013)). The 2013 study by Abdulkadiroğlu, Hu, and Pathak also examined a variety of additional student outcomes, including standardized test scores on New York State’s Regents exams, attendance, college entrance exam scores (PSAT/SAT), and postsecondary outcomes.

Context

Hanover reviewed the literature on school size and identified only a small number of studies that use randomization to examine school size as a treatment factor. All three of these studies take place in the same, somewhat unique setting: New York City Public Schools.

In addition, a review of the research on the effect of high school size conducted by Linda Darling-Hammond, Peter Ross, and Michael Milliken identified the “lack of randomized trials, scarcity of controlled comparison group designs, inattention in many correlational studies to selection effects, size values, and nonlinear relationships, and absence of modeling that takes multiple levels of variables into account” as obstacles to examining and estimating the true effects of school size.10

Open Enrollment

Findings

Hanover Research identified two RCT studies on open enrollment: one examining Connecticut’s inter-district magnet schools and another examining North Carolina’s early college programs. The early college study finds that these programs significantly improved college enrollment and completion, with effects concentrated in enrollment at two-year colleges. Connecticut’s inter-district magnets were created as an explicit strategy to reduce segregation in the state’s public school systems and exist in three locations: Hartford, Waterbury, and New Haven. The Connecticut study leverages the magnets’ random admission lotteries to compare outcomes for students who win the lottery and enroll with outcomes for students who lose the lottery and do not enroll. The authors find statistically significant benefits of inter-district magnet attendance on Grade 8 standardized assessments in math (0.14 standard deviations) and literacy (0.28 standard deviations) for lottery winners compared with applicants who did not win.

Context

Hanover was unable to identify any RCTs focused specifically on “intra-district enrollment,” “inter-district enrollment,” or “open enrollment” at broad scale. Rather, there are a number of studies that use the presence of such systems—particularly those that include elements of randomization—to examine the impact of specific types of schools, such as public charters or magnet schools. We have examined one RCT studying the impact of Connecticut’s inter-district magnet schools as well as a recent experimental study examining postsecondary outcomes for students enrolled in magnet programs using an early college model in North Carolina.

Common Application / Unified Enrollment System

Findings

Hanover identified two RCTs of informational interventions designed to help students and parents navigate complex school choice enrollment systems. First, a 2018 study of more than 19,000 middle school students in New York City Public Schools randomized an informational intervention across 165 middle schools to help Grade 8 students identify high-performing high school options in their geographic area. The study found that a simple list of school choices ranked by graduation rate, accompanied by information about the logistics of the application process and relevant public transportation, changed the high school application behavior of Grade 8 students and, ultimately, the quality of the schools to which they were “matched” through the school choice process. Second, in a 2019 study, Weixler et al. found that parents of young children who received text message-based outreach were more likely to complete the verification process to enroll their child in free, public early childhood education (ECE) programs in New Orleans. Children of parents in the treatment groups were also more likely to be enrolled in ECE programs one year later.

Context

As school choice options for parents and students increase in districts across the country, the logistical process of choosing a school has become more complex. Strategies like common enrollment applications and unified enrollment systems aim to streamline the school choice process. First, Hanover reviewed the research on common enrollment application systems for K-12 education and did not identify any randomized controlled trials on this topic. Second, Hanover reviewed the literature on unified enrollment systems, which are considered the most evolved form of common enrollment because they include all schools in the district. As with common application systems, we did not identify any randomized controlled trials of unified enrollment systems. As of September 2018, just six school districts in the United States had implemented such a system, although another four cities were “considering or implementing unified enrollment systems.”11

An emerging area of RCT research related to common enrollment applications and unified enrollment systems appears to be informational interventions that help families navigate the enrollment process, as identified in the table below.

Portfolio Management

No RCT Findings

Context

A review of the literature on portfolio school district management did not identify any experimental studies based on randomized controlled trials. Such a study would be particularly difficult to conduct: portfolio management is a district-level strategy, and it would be logistically challenging, if not impossible, to truly randomize students across district lines. The Center on Reinventing Public Education (CRPE), an organization that advocates for the portfolio approach, describes the state of the research as follows:

“Studies of high-performing schools across the private, district, and charter sectors have identified empowered school leaders and coherent school designs as key drivers of their success. The portfolio strategy is about creating the policy and system conditions and incentives that would allow high-performing schools to thrive. Sustained student improvement outcomes in Chicago, Denver, Washington, D.C., and New York City, as well as promising early results in Camden, New Orleans, and Indianapolis, suggest that the portfolio strategy has the potential to foster thriving schools at scale across a city, rather than just in pockets.

That said, causal studies of the strategy are complex endeavors: the portfolio strategy is more than a single idea. It involves many different policies and actors working together, and may entail different interventions in different cities depending on the local context. In an upcoming project, CRPE hopes to engage a panel of experts to develop a research agenda and program to identify how well the key policies associated with the portfolio strategy positively impact educator job satisfaction, public engagement, and student learning.”12

Further, a recent study of the Portfolio Management Model’s (PMM) implementation in New Orleans, Louisiana—not a randomized controlled trial—remarks that the early research on this approach is still evolving:

“Although at least 45 cities have used the PMM (Center for Reinventing Public Education, 2016), the extant literature has been relatively silent on the effectiveness of the PMM to improve student outcomes (Harris & Larsen, 2016; Strunk, Marsh, Hashim, Bush, & Weinstein, 2016) or the variation in school quality among the educational options within a portfolio district (Berends & Waddington, 2014). To date, the majority of the research has focused on the design, implementation, and political aspects of portfolio districts (Bulkley, 2010; Hill et al., 2013; Hill et al., 2009).”13

School Takeover

No RCT Findings

Context

School takeover is a strategy that states or districts may employ when an individual school is chronically underperforming. The strategy is most often associated with the No Child Left Behind Act, under which takeover by the state was one of several options school districts could pursue when a school failed to meet the law’s performance requirements for more than five years. Under the current federal education law, the Every Student Succeeds Act (ESSA), 25 state ESSA plans explicitly address school takeover as a mechanism for states to change the governance structure of underperforming schools after initial intervention and support. However, “[a]pproaches vary in terms of degrees of control, timeline and progression of implementation, and level of detail provided.”

A review of the literature on school takeover did not identify any randomized controlled trials. A 2005 series on school turnaround strategies notes that “… there are no examples to date of districts that have voluntarily allowed the state to take over individual low-performing schools, there is no research base to indicate under what conditions this option would lead to improved academic outcomes for students or why a state would take this path.” This suggests that school takeover may be used only in particularly difficult and fraught cases, and that it may not be feasible, ethical, or legal to randomly assign takeover as a strategy for chronically underperforming schools.

References

- Citations listed by reform type, in chronological order starting with most recent year, then alphabetical

- What Works Clearinghouse (WWC) reviews nested under the corresponding study, as applicable

- For those reforms with little or no experimental research literature, we include other publications for context purposes

Private School Choice (Vouchers)

Albert Cheng, Matthew M. Chingos, and Paul E. Peterson (2019), Experimentally Estimated Impacts of School Vouchers on Educational Attainments of Moderately and Severely Disadvantaged Students (EdWorkingPaper 19-76), retrieved from Annenberg Institute at Brown University: http://edworkingpapers.com/ai19-76

Matthew M. Chingos, Daniel Kuehn, Tomas Monarrez, Patrick J. Wolf, John F. Witte, and Brian Kisida (2019), The Effects of Means-Tested Private School Choice Programs on College Enrollment and Graduation, retrieved from Urban Institute website: https://www.urban.org/sites/default/files/publication/100665/the_effects_of_means-tested_private_school_choice_programs_on_college_enrollment_and_graduation_2.pdf

Heidi H. Erickson, Jonathan N. Mills, and Patrick J. Wolf (2019), The Effect of the Louisiana Scholarship Program on College Entrance (Louisiana Scholarship Program Evaluation Report 12), University of Arkansas, retrieved from https://dx.doi.org/10.2139/ssrn.3376236

Jonathan N. Mills and Patrick J. Wolf (2019), The Effects of the Louisiana Scholarship Program on Student Achievement After Four Years (Louisiana Scholarship Program Evaluation Report 10), University of Arkansas, retrieved from https://dx.doi.org/10.2139/ssrn.3376230

Ann Webber, Ning Rui, Roberta Garrison-Mogren, Robert B. Olsen, and Babette Gutmann (2019), Evaluation of the DC Opportunity Scholarship Program: Impacts Three Years After Students Applied (NCEE 2019-4006), retrieved from Institute of Education Sciences website: https://ies.ed.gov/ncee/pubs/20194006/pdf/20194006.pdf

Atila Abdulkadiroğlu, Parag A. Pathak, and Christopher R. Walters (2018), Free to Choose: Can School Choice Reduce Student Achievement? American Economic Journal: Applied Economics, 10(1), pp. 175–206, https://dx.doi.org/10.1257/app.20160634

Marianne Bitler, Thurston Domina, Emily Penner, and Hilary Hoynes (2015), Distributional Analysis in Educational Evaluation: A Case Study from the New York City Voucher Program, Journal of Research on Educational Effectiveness, 8(3), pp. 419–450, https://dx.doi.org/10.1080/19345747.2014.921259

Matthew M. Chingos and Paul E. Peterson (2015), Experimentally Estimated Impacts of School Vouchers on College Enrollment and Degree Attainment, Journal of Public Economics, 122, pp. 1–12, https://dx.doi.org/10.1016/j.jpubeco.2014.11.013

Patrick J. Wolf, Brian Kisida, Babette Gutmann, Michael Puma, Nada Eissa, and Lou Rizo (2013), School Vouchers and Student Outcomes: Experimental Evidence from Washington, DC, Journal of Policy Analysis and Management, 32(2), pp. 246–270, http://dx.doi.org/10.1002/pam.21691

Institute of Education Sciences, “WWC Review of Evaluation of the DC Opportunity Scholarship Program: Final Report (NCEE 2010-4018)” [Web page], retrieved from https://ies.ed.gov/ncee/wwc/Study/67304

Hui Jin, John Barnard, and Donald Rubin (2010), A Modified General Location Model for Noncompliance with Missing Data: Revisiting the New York City School Choice Scholarship Program using Principal Stratification, Journal of Educational and Behavioral Statistics, 35(2), pp. 154–173, https://dx.doi.org/10.3102/1076998609346968

Joshua Cowen (2008), School Choice as a Latent Variable: Estimating the “Complier Average Causal Effect” of Vouchers in Charlotte, Policy Studies Journal, 36(2), pp. 301–315, https://dx.doi.org/10.1111/j.1541-0072.2008.00268.x

Carlos Lamarche (2008), Private School Vouchers and Student Achievement: A Fixed Effects Quantile Regression Evaluation, Labour Economics, 15(4), pp. 575–590, https://doi.org/10.1016/j.labeco.2008.04.007

Eric Bettinger and Robert Slonim (2006), Using Experimental Economics to Measure the Effects of a Natural Educational Experiment on Altruism, Journal of Public Economics, 90(8–9), pp. 1625–1648, https://dx.doi.org/10.1016/j.jpubeco.2005.10.006

Alan Krueger and Pei Zhu (2004), Another Look at the New York City School Voucher Experiment, American Behavioral Scientist, 47(5), pp. 658–698, https://dx.doi.org/10.1177/0002764203260152

John Barnard, Constantine Frangakis, Jennifer Hill, and Donald Rubin (2003), Principal Stratification Approach to Broken Randomized Experiments: A Case Study of School Choice Vouchers in New York City, Journal of the American Statistical Association, 98(462), pp. 310–326, https://dx.doi.org/10.1198/016214503000071

William G. Howell, Patrick J. Wolf, David E. Campbell, and Paul E. Peterson (2002), School Vouchers and Academic Performance: Results from Three Randomized Field Trials, Journal of Policy Analysis and Management, 21(2), pp. 191–217, https://dx.doi.org/10.1002/pam.10023

Jay P. Greene (2001), Vouchers in Charlotte, Education Matters, 1(2), pp. 55–60, retrieved from Education Next website: http://educationnext.org/files/ednext20012_46b.pdf

Jay P. Greene, Paul Peterson, and Jiangtao Du (1999), Effectiveness of School Choice: The Milwaukee Experiment, Education and Urban Society, 31(2), pp. 190–213, https://dx.doi.org/10.1177/0013124599031002005

Cecilia E. Rouse (1998), Private School Vouchers and Student Achievement: An Evaluation of the Milwaukee Parental Choice Program, Quarterly Journal of Economics, 113(2), pp. 553–602, https://dx.doi.org/10.1162/003355398555685

Public Charter Schools

Thomas Coen, Ira Nichols-Barrer, and Philip Gleason (2019), An Impact that Lasts: KIPP Middle Schools Boost College Enrollment, Mathematica Policy Research, retrieved from: https://files.eric.ed.gov/fulltext/ED598693.pdf

Sarah Cohodes, Elizabeth Setren, and Christopher R. Walters (2019), Can Successful Schools Replicate? Scaling Up Boston’s Charter School Sector (NBER Working Paper 25796), retrieved from National Bureau of Economic Research website: https://www.nber.org/papers/w25796

Matthew Davis and Blake Heller (2019), “No Excuses Charter Schools and College Enrollment: New Evidence from a High School Network in Chicago,” Education Finance and Policy, 14(3), pp. 414–440, https://dx.doi.org/10.1162/edfp_a_00244

Kate Place and Philip Gleason (2019), Do Charter Middle Schools Improve Students’ College Outcomes? (NCEE 2019-4005), retrieved from Institute of Education Sciences website: https://ies.ed.gov/ncee/pubs/20194005/pdf/20194005.pdf

Susan Dynarski, Daniel Hubbard, Brian Jacob, and Silvia Robles (2018), Estimating the Effects of a Large For-Profit Charter School Operator (NBER Working Paper 24428), retrieved from National Bureau of Economic Research website: https://www.nber.org/papers/w24428

Brian Gill, Charles Tilley, Emilyn Whitesell, Mariel Finucane, Liz Potamites, and Sean Corcoran (2018), The Impact of Democracy Prep Public Schools on Civic Participation, retrieved from Mathematica Policy Research website: https://www.mathematica.org/our-publications-and-findings/publications/the-impact-of-democracy-prep-public-schools-on-civic-participation

Rebecca Unterman (2017), An Early Look at the Effects of Success Academy Charter Schools, MDRC, retrieved from: https://files.eric.ed.gov/fulltext/ED575961.pdf

Julia Chabrier, Sarah Cohodes, and Philip Oreopoulos (2016), What Can We Learn from Charter School Lotteries? (NBER Working Paper 22390), retrieved from National Bureau of Economic Research website: https://www.nber.org/papers/w22390.pdf

Will Dobbie and Roland G. Fryer (2015), “The Medium-Term Impacts of High-Achieving Charter Schools,” Journal of Political Economy, 123(5), pp. 985–1037, https://dx.doi.org/10.1086/682718

Christina C. Tuttle, Philip Gleason, Virginia Knechtel, Ira Nichols-Barrer, Kevin Booker, Gregory Chojnacki, . . . Lisbeth Goble (2015), Understanding the Effect of KIPP as it Scales: Volume I, Impacts on Achievement and Other Outcomes, Final Report of KIPP’s Investing in Innovation Grant Evaluation, Mathematica Policy Research, retrieved from: https://files.eric.ed.gov/fulltext/ED560079.pdf

Institute of Education Sciences, “WWC Review of Understanding the Effect of KIPP as it Scales: Volume I, Impacts on Achievement and Other Outcomes, Final Report of KIPP’s Investing in Innovation Grant Evaluation [Elementary school].” [Web page], retrieved from: https://ies.ed.gov/ncee/wwc/Study/85517

Institute of Education Sciences, “WWC Review of Understanding the Effect of KIPP as it Scales: Volume I, Impacts on Achievement and Other Outcomes, Final Report of KIPP’s Investing in Innovation Grant Evaluation [Middle School RCT].” [Web page], retrieved from: https://ies.ed.gov/ncee/wwc/Study/85538

Joshua D. Angrist, Sarah R. Cohodes, Susan M. Dynarski, Parag A. Pathak, and Christopher R. Walters (2014, March), Stand and Deliver: Effects of Boston’s Charter High Schools on College Preparation, Entry, and Choice, paper presented at the Society for Research on Educational Effectiveness’s Spring 2014 Conference, abstract retrieved from https://files.eric.ed.gov/fulltext/ED562936.pdf

Institute of Education Sciences, “WWC Review of Stand and Deliver: Effects of Boston’s Charter High Schools on College Preparation, Entry, and Choice” [Web page], retrieved from: https://ies.ed.gov/ncee/wwc/Study/77769

Vilsa E. Curto and Roland G. Fryer (2014), “Potential of Urban Boarding Schools for the Poor: Evidence from SEED,” Journal of Labor Economics, 32(1), pp. 65–93, http://www.jstor.org/stable/10.1086/671798

Joshua D. Angrist, Parag A. Pathak, and Christopher Walters (2013), “Explaining Charter School Effectiveness,” American Economic Journal: Applied Economics, 5(4), pp. 1–27, retrieved from Massachusetts Institute of Technology website: http://seii.mit.edu/wp-content/uploads/2012/12/Explaining-Charter-School-Effectiveness.pdf

Will Dobbie and Roland G. Fryer (2013), Medium-Term Impacts of High-Achieving Charter Schools on Non-Test Score Outcomes (NBER Working Paper 19581), retrieved from National Bureau of Economic Research website: https://www.nber.org/papers/w19581.pdf

Will Dobbie and Roland G. Fryer (2013), “Getting Beneath the Veil of Effective Schools: Evidence from New York City,” American Economic Journal: Applied Economics, 5(4), pp. 28–60, http://dx.doi.org/10.1257/app.5.4.28

Christina C. Tuttle, Brian Gill, Philip Gleason, Virginia Knechtel, Ira Nichols-Barrer, and Alexandra Resch (2013), KIPP Middle Schools: Impacts on Achievement and Other Outcomes, Final Report, Mathematica Policy Research, retrieved from https://files.eric.ed.gov/fulltext/ED540912.pdf

Institute of Education Sciences, “WWC Review of KIPP Middle Schools: Impacts on Achievement and Other Outcomes, Final Report” [Web page], retrieved from https://ies.ed.gov/ncee/wwc/Study/77028

Atila Abdulkadiroğlu, Joshua D. Angrist, Susan M. Dynarski, Thomas J. Kane, and Parag A. Pathak (2011), “Accountability and Flexibility in Public Schools: Evidence from Boston’s Charters and Pilots,” Quarterly Journal of Economics, 126(2), pp. 699–748, https://dx.doi.org/10.1093/qje/qjr017

Will Dobbie and Roland G. Fryer (2011), “Are High-Quality Schools Enough to Increase Achievement among the Poor? Evidence from the Harlem Children’s Zone,” American Economic Journal: Applied Economics, 3(3), pp. 158–187, http://dx.doi.org/10.1257/app.3.3.158

Joshua D. Angrist, Susan M. Dynarski, Thomas J. Kane, Parag A. Pathak, and Christopher R. Walters (2010), “Inputs and Impacts in Charter Schools: KIPP Lynn,” American Economic Review, 100(2), pp. 239–243, http://dx.doi.org/10.1257/aer.100.2.239

Philip Gleason, Melissa Clark, Christina C. Tuttle, Emily Dwoyer, and Marsha Silverberg (2010), The Evaluation of Charter School Impacts: Final Report (NCEE 2010-4029), National Center for Education Evaluation and Regional Assistance, retrieved from: https://files.eric.ed.gov/fulltext/ED510573.pdf

Institute of Education Sciences, “WWC Review of The Evaluation of Charter School Impacts: Final Report (NCEE 2010-4029),” retrieved from: https://ies.ed.gov/ncee/wwc/Study/67302

Will Dobbie and Roland G. Fryer (2009), Are High-Quality Schools Enough to Close the Achievement Gap? Evidence from a Social Experiment in Harlem (NBER Working Paper 15473), retrieved from National Bureau of Economic Research website: https://www.nber.org/papers/w15473.pdf

Institute of Education Sciences, “WWC Review of Are High-Quality Schools Enough to Close the Achievement Gap? Evidence from a Social Experiment in Harlem,” retrieved from: https://ies.ed.gov/ncee/wwc/Study/67283

Caroline M. Hoxby and Sonali Murarka (2009), Charter Schools in New York City: Who Enrolls and How They Affect Their Students’ Achievement (NBER Working Paper 14852), retrieved from National Bureau of Economic Research website: https://www.nber.org/papers/w14852.pdf

Caroline M. Hoxby and Jonah E. Rockoff (2004), The Impact of Charter Schools on Student Achievement (Unpublished paper), retrieved from https://www0.gsb.columbia.edu/faculty/jrockoff/hoxbyrockoffcharters.pdf

Pre-Kindergarten

Allison Atteberry, Daphna Bassok, and Vivian C. Wong (2019), “The Effects of Full-Day Prekindergarten: Experimental Evidence of Impacts on Children’s School Readiness,” Educational Evaluation and Policy Analysis, 41(4), pp. 537–562, https://dx.doi.org/10.3102/0162373719872197

Francis A. Pearman (2019), “The Moderating Effect of Neighborhood Poverty on Preschool Effectiveness: Evidence From the Tennessee Voluntary Prekindergarten Experiment,” American Educational Research Journal, advance online publication, https://dx.doi.org/10.3102%2F0002831219872977

Ellen Peisner-Feinberg, Sabrina Zadrozny, Laura Kuhn, and Karen Van Manen (2019), Effects of the North Carolina Pre-Kindergarten Program: Findings through Pre-K of a Small-Scale RCT Study, 2017-2018 Statewide Evaluation, University of North Carolina, retrieved from https://files.eric.ed.gov/fulltext/ED598158.pdf

Mark W. Lipsey, Dale C. Farran, and Kelley Durkin (2018), “Effects of the Tennessee Prekindergarten Program on Children’s Achievement and Behavior through Third Grade,” Early Childhood Research Quarterly, 45, pp. 155–176, https://dx.doi.org/10.1016/j.ecresq.2018.03.005

Jorge L. Garcia, James J. Heckman, Duncan E. Leaf, and Maria J. Prados (2016), The Life-Cycle Benefits of an Influential Early Childhood Program (NBER Working Paper 22993), retrieved from National Bureau of Economic Research website: https://www.nber.org/papers/w22993.pdf

Institute of Education Sciences, “WWC Review of The Life-Cycle Benefits of an Influential Early Childhood Program” [Web page], retrieved from https://ies.ed.gov/ncee/wwc/Study/84893

Mark W. Lipsey, Dale C. Farran, and Kerry G. Hofer (2015), A Randomized Control Trial of a Statewide Voluntary Prekindergarten Program on Children’s Skills and Behaviors through Third Grade, Peabody Research Institute, Vanderbilt University, retrieved from: https://files.eric.ed.gov/fulltext/ED566664.pdf

Michael Puma, Stephen Bell, Ronna Cook, Camilla Heid, Pam Broene, Frank Jenkins, . . . Jason Downer (2012), Third Grade Follow-Up to the Head Start Impact Study: Final Report (OPRE Report 2012-45), Administration for Children and Families, U.S. Department of Health and Human Services, retrieved from: https://www.acf.hhs.gov/sites/default/files/opre/head_start_report_0.pdf

Lawrence J. Schweinhart (2010), “The High/Scope Perry Preschool Study: A Case Study in Random Assignment,” Evaluation and Research in Education, 14(3-4), pp. 136–147, https://dx.doi.org/10.1080/09500790008666969

Michael Puma, Stephen Bell, Ronna Cook, Camilla Heid, Gary Shapiro, Pam Broene, . . . Elizabeth Spier (2010), Head Start Impact Study: Final Report, Administration for Children and Families, U.S. Department of Health and Human Services, retrieved from: https://files.eric.ed.gov/fulltext/ED507845.pdf

Institute of Education Sciences, “WWC Review of Head Start Impact Study: Final Report” [Web page], retrieved from https://ies.ed.gov/ncee/wwc/Study/80434

Frances A. Campbell, Craig T. Ramey, Elizabeth Pungello, Joseph Sparling, and Shari Miller-Johnson (2002), “Early Childhood Education: Young Adult Outcomes from the Abecedarian Project,” Applied Developmental Science, 6(1), pp. 45–57, https://dx.doi.org/10.1207/S1532480XADS0601_05

David P. Weikart (1967), Preschool Intervention – A Preliminary Report of the Perry Preschool Project, Ypsilanti Public Schools, retrieved from: https://eric.ed.gov/?id=ED018251

School Size

Atila Abdulkadiroğlu, Weiwei Hu, and Parag A. Pathak (2013), Small High Schools and Student Achievement: Lottery-Based Evidence from New York City (NBER Working Paper 19576), retrieved from National Bureau of Economic Research website: http://www.nber.org/papers/w19576.pdf

Institute of Education Sciences, “WWC Review of Small High Schools and Student Achievement: Lottery-Based Evidence from New York City (NBER Working Paper 19576)” [Web page], retrieved from https://ies.ed.gov/ncee/wwc/Study/81467

Summary and data adapted from: Howard S. Bloom and Rebecca Unterman (2013), Sustained Progress: New Findings About the Effectiveness and Operation of Small Public High Schools of Choice in New York City, MDRC, retrieved from: https://files.eric.ed.gov/fulltext/ED545475.pdf

Institute of Education Sciences, “WWC Review of Sustained Progress: New Findings About the Effectiveness and Operation of Small Public High Schools of Choice in New York City” [Web page], retrieved from https://ies.ed.gov/ncee/wwc/Study/78538

Howard S. Bloom, Saskia L. Thompson, and Rebecca Unterman (2010), Transforming the High School Experience: How New York City’s New Small Schools Are Boosting Student Achievement and Graduation Rates, MDRC, retrieved from: https://files.eric.ed.gov/fulltext/ED511106.pdf

Institute of Education Sciences, “WWC Review of Transforming the High School Experience: How New York City’s New Small Schools Are Boosting Student Achievement and Graduation Rates” [Web page], retrieved from: https://ies.ed.gov/ncee/wwc/Study/78545

Common Application/Unified Enrollment System

Lindsay Weixler, Jon Valant, Daphna Bassok, Justin B. Doromal, and Alica Gerry (2018), Helping Parents Navigate the Early Childhood Enrollment Process: Experimental Evidence from New Orleans, Education Research Alliance for New Orleans, Tulane University, retrieved from: https://educationresearchalliancenola.org/files/publications/Weixler-et-al-ECE-RCT-6_7.pdf

Kevin Hesla (2018), Unified Enrollment: Lessons Learned from Across the Country, retrieved from National Alliance for Public Charter Schools website: https://www.publiccharters.org/sites/default/files/documents/2018-09/rd3_unified_enrollment_web.pdf

Sean P. Corcoran, Jennifer L. Jennings, Sarah R. Cohodes, and Carolyn Sattin-Bajaj (2018), Leveling the Playing Field for High School Choice: Results from a Field Experiment of Informational Interventions (NBER Working Paper 24471), retrieved from National Bureau of Economic Research website: https://www.nber.org/papers/w24471.pdf

Class Size

Raj Chetty, John N. Friedman, Nathaniel Hilger, Emmanuel Saez, Diane W. Schanzenbach, and Danny Yagan (2010), How Does Your Kindergarten Classroom Affect Your Earnings? Evidence from Project STAR (NBER Working Paper 16381), retrieved from National Bureau of Economic Research website: https://www.nber.org/papers/w16381.pdf

Barbara Nye, Larry V. Hedges, and Spyros Konstantopoulos (2001), “The Long-Term Effects of Small Classes in Early Grades: Lasting Benefits in Mathematics Achievement at Grade 9,” Journal of Experimental Education, 69(3), pp. 245–257, https://dx.doi.org/10.1080/00220970109599487

Alan B. Krueger and Diane M. Whitmore (2001), “The Effect of Attending a Small Class in the Early Grades on College‐test Taking and Middle School Test Results: Evidence from Project Star,” The Economic Journal, 111(468), pp. 1–28, https://dx.doi.org/10.1111/1468-0297.00586

Helen Pate-Bain, Jayne Boyd-Zaharias, Van A. Cain, Elizabeth Word, and M. Edward Binkley (1997), STAR Follow-Up Studies, 1996-1997, HEROS, Inc., retrieved from: https://files.eric.ed.gov/fulltext/ED419593.pdf

Elizabeth Word, John Johnston, Helen Pate-Bain, B. DeWayne Fulton, Jayne Boyd-Zaharias, Charles M. Achilles, . . . Carolyn Breda (1990), The State of Tennessee’s Student/Teacher Achievement Ratio (STAR) Project: Technical Report, 1985-1990, Tennessee Department of Education, retrieved from: https://www.classsizematters.org/wp-content/uploads/2016/09/STAR-Technical-Report-Part-I.pdf

Open Enrollment

Mengli Song and Kristina L. Zeiser (2019), Early College, Continued Success: Longer-Term Impact of Early College High Schools, American Institutes for Research, retrieved from: https://files.eric.ed.gov/fulltext/ED602451.pdf

Data and summary taken from: Robert Bifulco, Casey D. Cobb, and Courtney Bell (2009), “Can Interdistrict Choice Boost Student Achievement? The Case of Connecticut’s Interdistrict Magnet School Program,” Educational Evaluation and Policy Analysis, 31(4), pp. 323–345, https://dx.doi.org/10.3102%2F0162373709340917

Institute of Education Sciences, “WWC Review of ‘Can Interdistrict Choice Boost Student Achievement? The Case of Connecticut’s Interdistrict Magnet School Program'” [Web page], retrieved from: https://ies.ed.gov/ncee/wwc/Study/81476

Gary Miron, Kevin G. Welner, Patricia H. Hinchey, and Alex Molnar (Eds.) (2008), School Choice: Evidence and Recommendations, retrieved from Great Lakes Center for Education Research and Practice website: https://greatlakescenter.org/docs/Research/2008charter/policy_briefs/all.pdf

School Takeover

Samantha Batel (2018, February 2), “Do ESSA Plans Show Promise for Improving Schools?,” retrieved from Center for American Progress website: https://www.americanprogress.org/issues/education-k-12/news/2018/02/02/445825/essa-plans-show-promise-improving-schools

Lucy M. Steiner, Julia M. Kowal, Matthew D. Arkin, Bryan C. Hassel, and Emily A. Hassel (2005), State Takeovers of Individual Schools, Learning Point Associates, retrieved from: https://files.eric.ed.gov/fulltext/ED489527.pdf

Portfolio Management

Center on Reinventing Public Education, “Frequently Asked Questions About the Portfolio Strategy” [Web page], retrieved from: https://www.crpe.org/content/portfolio-faq

Andrew J. McEachin, Richard O. Welsh, and Dominic J. Brewer (2016), “The Variation in Student Achievement and Behavior Within a Portfolio Management Model: Early Results from New Orleans,” Educational Evaluation and Policy Analysis, 38(4), p. 669, https://dx.doi.org/10.3102/0162373716659928

Paul T. Hill (2006), Put Learning First: A Portfolio Approach to Public Schools (Progressive Policy Institute Policy Report), retrieved from: https://www.crpe.org/publications/put-learning-first-portfolio-approach-public-schools

Appendix

Study Inclusion Criteria

- A study must report intervention/reform effects on academic outcomes (e.g. math/reading skills, test scores) or education attainment outcomes (e.g. high school completion; college enrollment, persistence, completion).

- A study must employ randomized controls and have an experimental research design with control and treatment groups. Randomization produces comparison groups that are, on average, equivalent on factors that are both observable (e.g., baseline test scores and gender) and unobservable (e.g., students’ and parents’ motivation). The only systematic difference between the two groups is exposure to the treatment, or reform. Thus, differences in measured outcomes between lottery winners and lottery losers can be attributed to the education intervention or reform rather than to students’ background characteristics. Randomization gives us a high degree of confidence that these differences are due to the intervention or reform (i.e., the treatment).

- We consider multiple studies on the same program as unique if they study a different group of students or use substantially different statistical models or research methods. Several longitudinal evaluations have been conducted on private school choice programs or the STAR Project, with results reported periodically. In these cases, we include the most recent evaluation. We exclude studies that were conducted by the same researchers and that examine the same program and student populations.

List of Searched Databases, Sources

- EBSCO Education

- EconLit

- ERIC

- Google Scholar

- JSTOR

- ProQuest Education

- WWC at the Institute of Education Sciences

Additional Resources

About EdChoice

EdChoice is a nonprofit, nonpartisan organization dedicated to advancing full and unencumbered educational choice as the best pathway to successful lives and a stronger society. EdChoice believes that families, not bureaucrats, are best equipped to make K–12 schooling decisions for their children. The organization works at the state level to educate diverse audiences, train advocates and engage policymakers on the benefits of high-quality school choice programs. EdChoice is the intellectual legacy of Milton and Rose D. Friedman, who founded the organization in 1996 as the Friedman Foundation for Educational Choice.

The contents of this publication are intended to provide empirical information and should not be construed as lobbying for any position related to any legislation.

About Hanover Research

Hanover Research is a brain trust designed to level the information playing field. We are hundreds of researchers who support thousands of organizational decisions every year. For more than 15 years, we have tailored insight to drive decisions. From strategic expansions into new markets, products, or programs to daily operations that delight customers, retain employees, and optimize revenue, Hanover’s team supports clients across the entire decision spectrum.

Our clients come in all shapes and sizes—from established global organizations, to emerging companies, to educational institutions. Their operating models are often lean, but their need for analytical rigor in decision making is equal to that of larger competitors. From CEOs and CMOs to Superintendents, Provosts, Chief Academic Officers and VPs of Finance, our research informs decisions at any level and across any department, capitalizing on our exposure to myriad industries and challenges. With time and money at a premium, they turn to Hanover to level the information playing field.

Endnotes

- Ron Zimmer, Richard Buddin, Sarah A. Smith, and Danielle Duffy (2019), Nearly Three Decades into the Charter School Movement, What Has Research Told Us about Charter Schools? (EdWorkingPaper 19-156), retrieved from Annenberg Institute for School Reform at Brown University: https://www.edworkingpapers.com/sites/default/files/ai19-156.pdf; Julian R. Betts and Y. Emily Tang (2014), A Meta-Analysis of the Literature on the Effect of Charter Schools on Student Achievement (CRPE Working Paper), retrieved from Center on Reinventing Public Education website: https://www.crpe.org/sites/default/files/CRPE_meta-analysis_charter-schools-effect-student-achievement_workingpaper.pdf

- EdChoice (forthcoming), The 123s of School Choice: What the Research Says about Private School Choice Programs in America, 2020 edition

- Grover J. Whitehurst (2017), Rigorous Preschool Research Illuminates Policy (and Why the Heckman Equation May Not Compute) (Evidence Speaks), retrieved from Brookings Institution website: https://www.brookings.edu/research/rigorous-preschool-research-illuminates-policy-and-why-the-heckman-equation-may-not-compute

- Corey A. DeAngelis, Heidi H. Erickson, and Gary W. Ritter (2017), Is Pre-Kindergarten an Educational Panacea? A Systematic Review and Meta-Analysis of Scaled-Up Pre-Kindergarten in the United States (EDRE Working Paper 2017-08), University of Arkansas, retrieved from https://dx.doi.org/10.2139/ssrn.2920635

- Beth Meloy, Madelyn Gardner, and Linda Darling-Hammond (2019), Untangling the Evidence on Preschool Effectiveness, retrieved from Learning Policy Institute website: https://learningpolicyinstitute.org/sites/default/files/product-files/Untangling_Evidence_Preschool_Effectiveness_REPORT.pdf

- Dale C. Farran and Mark W. Lipsey (2017, February 24), Misrepresented Evidence Doesn’t Serve Pre-K Programs Well [Blog post], retrieved from https://www.brookings.edu/blog/education-plus-development/2017/02/24/misrepresented-evidence-doesnt-serve-pre-k-programs-well

- Harvard University, “Tennessee’s Student Teacher Achievement Ratio (STAR) Project” [Web page], retrieved March 6, 2020 from https://dataverse.harvard.edu/dataset.xhtml?persistentId=hdl:1902.1/10766

- ERIC (2020), “Project STAR” [Collection search], retrieved from https://eric.ed.gov/?q=Project+STAR

- Grover J. Whitehurst and Matthew M. Chingos (2011), Class Size: What Research Says and What it Means for State Policy, retrieved from Brookings Institution website: https://www.brookings.edu/wp-content/uploads/2016/06/0511_class_size_whitehurst_chingos.pdf

- Linda Darling-Hammond, Peter Ross, and Michael Milliken (2007), “High School Size, Organization, and Content: What Matters for Student Success?,” Brookings Papers on Education Policy, pp. 163–203, http://dx.doi.org/10.1353/pep.2007.0001

- Kevin Hesla (2018), Unified Enrollment: Lessons Learned from Across the Country, retrieved from National Alliance for Public Charter Schools website: https://www.publiccharters.org/sites/default/files/documents/2018-09/rd3_unified_enrollment_web.pdf

- Center on Reinventing Public Education, “Frequently Asked Questions About the Portfolio Strategy” [Web page], retrieved from https://www.crpe.org/content/portfolio-faq

- Andrew J. McEachin, Richard O. Welsh, and Dominic J. Brewer (2016), “The Variation in Student Achievement and Behavior Within a Portfolio Management Model: Early Results from New Orleans,” Educational Evaluation and Policy Analysis, 38(4), p. 669, https://dx.doi.org/10.3102/0162373716659928